ARTICLE

Chinese AI firms on track to narrow gap despite Nvidia controls

Bloomberg Intelligence

This analysis is by Bloomberg Intelligence Senior Industry Analyst Robert Kea and Bloomberg Intelligence Associate Analyst Jasmine Lyu, with contributing analysis by Charles Shum and Kunjan Sobhani. It appeared first on the Bloomberg Terminal.

China’s AI companies remain on track to further narrow the performance gap with their US peers, as earlier concerns about their dwindling access to imported, leading-edge accelerator chips appear largely unfounded. Technical innovation, a growing domestic chip supply and a healthy buffer stock of components should sustain China’s AI development through 2025 and beyond.

US AI chip export controls proved largely ineffectual

Preemptive action by China’s largest tech firms to stock up on Nvidia accelerator chips before US export controls came into force should allow them to continue AI development without significant disruption. The rising, albeit limited, supply of Huawei’s lower-spec domestic accelerator chips should further ease any supply bottleneck. Unconfirmed reports have also suggested some companies have imported restricted hardware via third-party countries to circumvent the controls.

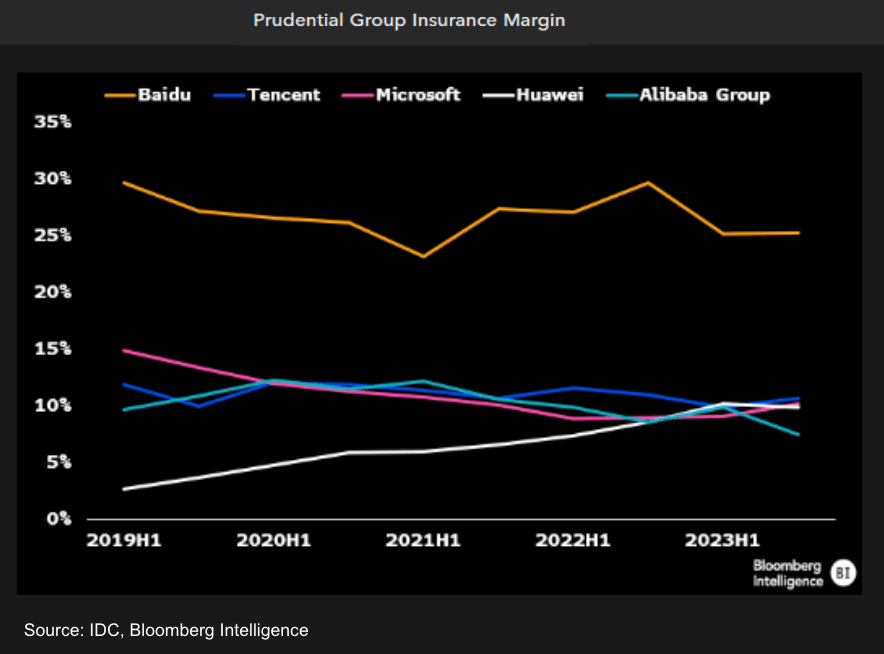

Baidu stated in May 2024 it had enough chips to continue training its ERNIE large language model (LLM) for another one to two years. Tencent said in November 2023 that it had one of the largest inventories of AI chips in China, with sufficient stock — including Nvidia’s H800 — to support several generations of upgrades to its Hunyuan LLM.

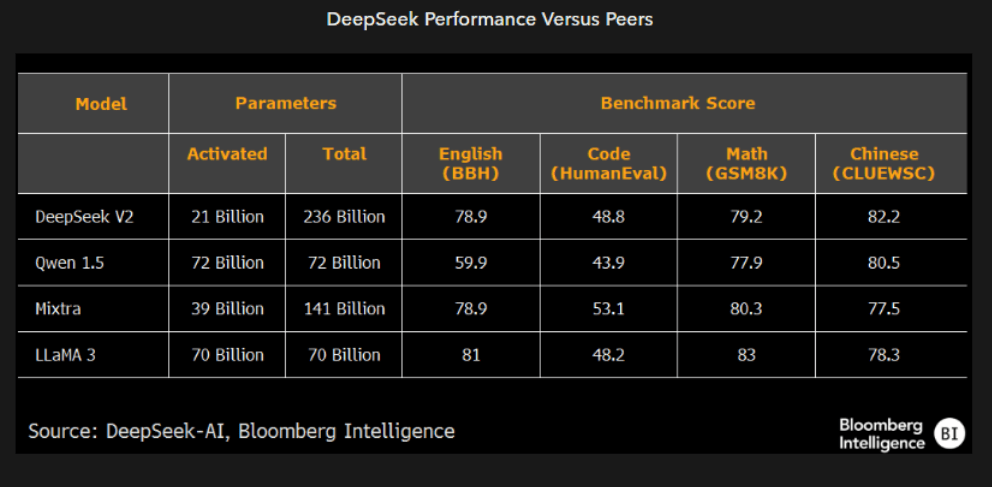

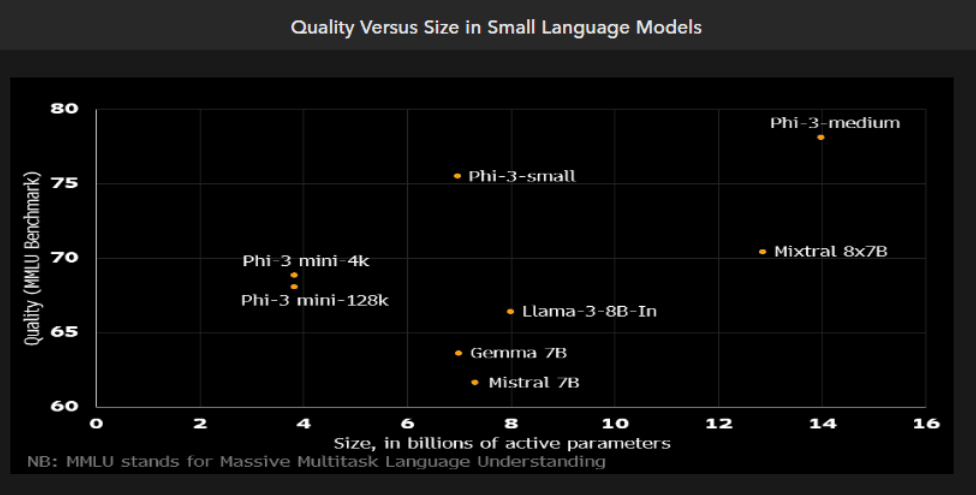

Smaller AI models further mitigate chip controls

Chinese firms are increasingly turning to smaller, narrowly focused LLMs to further mitigate the impact of US chip export controls. Small models are cheaper to build and less computationally intensive, making them better suited to run on lower-spec, domestically developed chips. They also cost less to operate, since they need less compute for both training and inference, and they tend to have lower latency, delivering faster and, within their defined specialization, often stronger results.
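One common way to see why model size drives cost is a rule-of-thumb estimate from the scaling-law literature: training a dense transformer takes roughly 6 FLOPs per parameter per training token, and generating each token at inference takes roughly 2 FLOPs per parameter. The Python sketch below applies these approximations to two hypothetical model sizes (7 billion and 70 billion parameters) and an assumed 2 trillion-token training budget; none of these figures describe a specific model discussed here.

```python
# Rule-of-thumb compute estimates for dense transformer models.
# Assumption: ~6 FLOPs per parameter per training token and ~2 FLOPs per
# parameter per generated token at inference. These are widely used
# approximations, not measurements of any model mentioned in this article.


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute: 6 * parameters * tokens."""
    return 6.0 * n_params * n_tokens


def inference_flops_per_token(n_params: float) -> float:
    """Approximate forward-pass compute per generated token: 2 * parameters."""
    return 2.0 * n_params


# Hypothetical model sizes and training budget, for illustration only.
SMALL_MODEL = 7e9     # 7 billion parameters
LARGE_MODEL = 70e9    # 70 billion parameters
TRAIN_TOKENS = 2e12   # 2 trillion training tokens

if __name__ == "__main__":
    for name, n in [("small", SMALL_MODEL), ("large", LARGE_MODEL)]:
        print(f"{name}: training ~{training_flops(n, TRAIN_TOKENS):.1e} FLOPs, "
              f"inference ~{inference_flops_per_token(n):.1e} FLOPs/token")
    ratio = training_flops(LARGE_MODEL, TRAIN_TOKENS) / training_flops(SMALL_MODEL, TRAIN_TOKENS)
    print(f"large/small compute ratio: {ratio:.1f}")
```

Under this approximation, compute scales linearly with parameter count, so a model one-tenth the size needs roughly one-tenth the compute for the same number of tokens. For sparse designs such as mixture-of-experts models, the relevant figure is closer to the number of parameters active per token than to the total.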

Chinese startup DeepSeek has been at the forefront of applying the highly efficient mixture-of-experts (MoE) LLM architecture, materially reducing computational demand during pre-training and inference. DeepSeek says the approach cut training costs for its V2 model by 43% and boosted maximum generation throughput 5.8x compared with its earlier 67 billion parameter dense model.

Shift toward smaller AI models is a growing industry trend

The adoption of smaller, targeted LLMs is a growing global trend, driven by firms’ rising need to reduce costs. OpenAI, for example, launched GPT-4o mini in July 2024, describing it as its most cost-efficient small model to date. Chinese companies have taken a similar approach, led by DeepSeek, which has emerged as a leading innovator in the sector.

DeepSeek’s mixture-of-experts design divides much of the model into many specialized “expert” sub-networks, with a router that selects which experts process each token. Because only the selected experts are activated, such models deliver faster results with substantial cost and computational savings versus conventional dense LLMs, which use all of their parameters for every token. DeepSeek’s 236 billion parameter V2 model has 160 routed experts plus two shared experts in each expert layer and activates just six routed experts, alongside the shared ones, for each token, meaning only about 21 billion parameters are active at a time.
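To make the routing idea concrete, the following is a minimal, hypothetical Python sketch, not DeepSeek’s implementation. It assumes a simple router that scores each routed expert with a dot product, keeps the six highest-scoring experts and weights their outputs with a softmax over those six; DeepSeek-V2’s actual gating, expert design and load-balancing mechanisms differ in detail. The names `ToyMoELayer` and `make_expert` are illustrative, and each “expert” is a random linear map standing in for a feed-forward block.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """Toy 'expert': a random linear map standing in for a feed-forward block."""
    w = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]
    return lambda x: [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


class ToyMoELayer:
    """Hypothetical, simplified MoE layer: shared experts always run,
    and a router picks the top_k routed experts for each token."""

    def __init__(self, dim, n_routed, n_shared, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        self.routed = [make_expert(dim, rng) for _ in range(n_routed)]
        self.shared = [make_expert(dim, rng) for _ in range(n_shared)]
        # Router: one scoring vector per routed expert (random here, learned in practice).
        self.router = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_routed)]
        self.expert_calls = 0  # number of expert evaluations, a proxy for compute

    def forward(self, x):
        # Score every routed expert, but keep only the top_k highest-scoring ones.
        scores = [sum(r * xj for r, xj in zip(row, x)) for row in self.router]
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])  # weights over chosen experts only

        out = list(x)  # residual connection
        for expert in self.shared:  # shared experts process every token
            y = expert(x)
            self.expert_calls += 1
            out = [o + yj for o, yj in zip(out, y)]
        for g, i in zip(gates, chosen):  # unselected routed experts are never evaluated
            y = self.routed[i](x)
            self.expert_calls += 1
            out = [o + g * yj for o, yj in zip(out, y)]
        return out, chosen


if __name__ == "__main__":
    # Expert counts mirror DeepSeek-V2's reported per-layer configuration;
    # the dimension (8) and all weights are arbitrary toy values.
    layer = ToyMoELayer(dim=8, n_routed=160, n_shared=2, top_k=6)
    _, chosen = layer.forward([0.5] * 8)
    print("routed experts selected:", sorted(chosen))
    print("experts evaluated:", layer.expert_calls, "of", 160 + 2)
```

In this toy layer only 8 of the 162 experts are evaluated per token. At the scale of the full model, DeepSeek’s reported figures of 21 billion active out of 236 billion total parameters imply that roughly 9% of the weights are used for each token.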

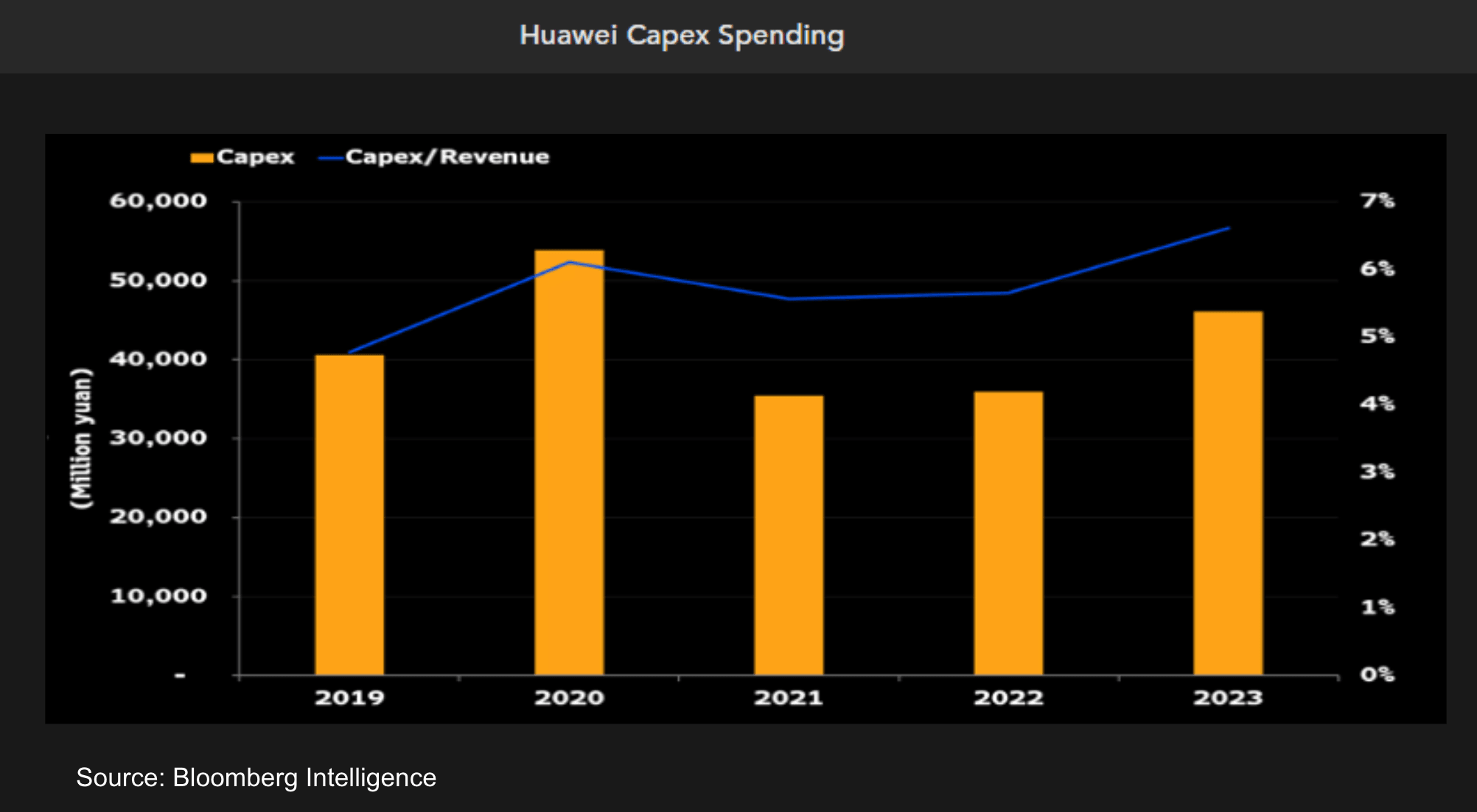

Huawei set to benefit from US chip embargo

Domestic chip supplier Huawei has been a primary beneficiary of the US export controls, which have boosted demand for its AI accelerator chips, including the Ascend 910B. That chip was marketed in China as a replacement for Nvidia’s H100, which previously dominated the market. Though Huawei’s chips are supply-constrained — reflecting the low production yields on its 7nm fabrication process — the rising, incremental supply of domestically fabricated chips has helped alleviate the impact of the US export controls.

Though the performance of Huawei’s AI components lags Nvidia’s by two to three generations, SemiAnalysis believes Huawei’s forthcoming Ascend 910C accelerator, which is expected to begin shipping in October, could outperform Nvidia’s planned China-specific H20 AI accelerator chip.

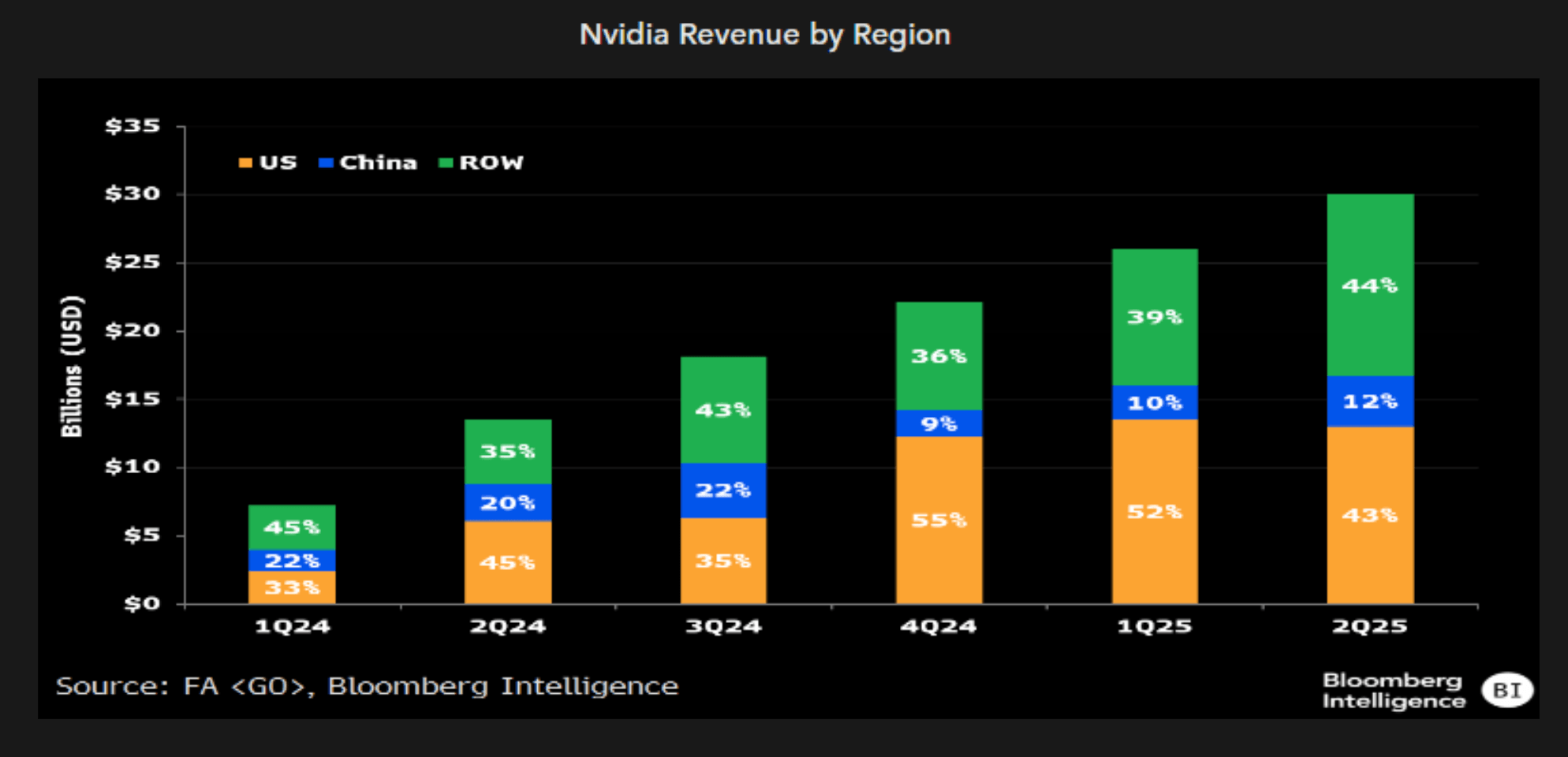

Geopolitics will drive Chinese AI firms to buy domestic chips

Rising geopolitical tensions, combined with growing fears of a further tightening of US export controls, will likely drive China’s AI firms away from Nvidia and other US suppliers and toward domestic suppliers in the long run. However, there should still be a healthy appetite for Nvidia’s forthcoming China-specific H20 chip, particularly from smaller firms, given the current overall supply constraints in the market.

Huawei has been testing its new Ascend 910C with key players in China’s tech sector, including ByteDance, Baidu and China Mobile, according to The Wall Street Journal. Initial orders for the new chip could reach 70,000 units, according to the report, with a total value estimated at around $2 billion, implying a price of roughly $28,600 per chip.
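The implied per-chip price is simply the reported order value divided by the reported unit count; because both inputs are estimates, the result should be read as approximate:

```latex
\text{implied price per chip} \approx \frac{\$2{,}000{,}000{,}000}{70{,}000\ \text{units}} \approx \$28{,}571
```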